PySpark - Machine Learning with Gradient Boost and Random Forest Classifier

PySpark ML with TF-IDF, CrossValidator, ParamGridBuilder, DecisionTreeClassifier, RandomForestClassifier, GBTClassifier, MultilayerPerceptronClassifier.

Source code link: https://gitfront.io/r/pranav/AGyTKh2Lq4kd/PySpark-Machine-Learning-with-Gradient-Boost-and-Random-Forest-Classifier/

Video Link: https://youtu.be/G-MCYTs8FpQ

Introduction & Methods

This report investigates how two ensemble methods, bagging (Random Forest) and boosting (Gradient-Boosted Trees), affect classification performance on a flight delay dataset. It compares them against a neural network model, with emphasis on data preparation, model training, and validation across several metrics.

Data Description

The report uses the Air Flight Dataset, which records flights, cancellations, and delays per airline from January 2018 and is derived from the TranStats "On-Time" database.

Architecture

The study uses PySpark's ML library and focuses on four classifiers: Decision Tree, Multilayer Perceptron neural network, Gradient-Boosted Trees, and Random Forest. The report outlines the code architecture and gives pseudo-code for data cleaning, balancing, preprocessing, and model training.
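The project description names CrossValidator and a parameter grid as part of model training. As a rough illustration of what that tuning step does, here is a minimal plain-Python sketch of grid expansion plus k-fold cross-validation. It is not the project's code and does not use PySpark; `build_param_grid`, `k_fold_indices`, `cross_validate`, and the toy threshold model are hypothetical names chosen for this example.

```python
from itertools import product


def build_param_grid(options):
    """Expand {name: [values]} into a list of {name: value} combinations."""
    names = sorted(options)
    return [dict(zip(names, combo))
            for combo in product(*(options[n] for n in names))]


def k_fold_indices(n_rows, k):
    """Yield (train_idx, valid_idx) pairs; fold i validates on rows where index % k == i."""
    for fold in range(k):
        valid = [i for i in range(n_rows) if i % k == fold]
        train = [i for i in range(n_rows) if i % k != fold]
        yield train, valid


def cross_validate(rows, labels, grid, k, fit, score):
    """Return the parameter set with the highest mean validation score."""
    best_params, best_score = None, float("-inf")
    for params in grid:
        fold_scores = []
        for train_idx, valid_idx in k_fold_indices(len(rows), k):
            model = fit([rows[i] for i in train_idx],
                        [labels[i] for i in train_idx], params)
            fold_scores.append(score(model,
                                     [rows[i] for i in valid_idx],
                                     [labels[i] for i in valid_idx]))
        mean_score = sum(fold_scores) / len(fold_scores)
        if mean_score > best_score:
            best_params, best_score = params, mean_score
    return best_params, best_score


# Toy stand-in for a classifier: predict 1 when x exceeds a threshold.
def fit(train_rows, train_labels, params):
    return params  # nothing to learn in this toy model


def score(model, rows, labels):
    preds = [int((x > model["threshold"]) != model["invert"]) for x in rows]
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


rows = [1, 2, 3, 4, 6, 7, 8, 9]          # illustrative data, not from the dataset
labels = [0, 0, 0, 0, 1, 1, 1, 1]
grid = build_param_grid({"threshold": [2, 5, 8], "invert": [False, True]})
best_params, best_score = cross_validate(rows, labels, grid, k=4, fit=fit, score=score)
print(best_params, best_score)
```

In a PySpark workflow the same roles would presumably be filled by the library's own tuning classes, with a real estimator and evaluator in place of the toy `fit` and `score` functions.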

Data Preprocessing

The preprocessing stage removes redundant columns, recodes the delay and cancellation indicators as binary values, normalizes textual data, and balances the dataset through undersampling.
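The recoding, text normalization, and undersampling steps can be sketched in plain Python as follows. This is only an illustration under stated assumptions: the column names (`carrier`, `dep_delay`, `cancelled`), the zero threshold for "delayed", and the helper names are all hypothetical and are not taken from the report or the dataset.

```python
import random
from collections import defaultdict


def recode_binary(value, threshold=0.0):
    """Return 1 if the numeric value exceeds the threshold, else 0 (None counts as 0)."""
    if value is None:
        return 0
    return 1 if float(value) > threshold else 0


def normalize_text(text):
    """Lowercase the text and collapse runs of whitespace."""
    return " ".join(str(text).lower().split())


def undersample(rows, label_key, seed=42):
    """Randomly drop rows from larger classes so every class matches the smallest one."""
    by_label = defaultdict(list)
    for row in rows:
        by_label[row[label_key]].append(row)
    target = min(len(group) for group in by_label.values())
    rng = random.Random(seed)
    balanced = []
    for group in by_label.values():
        balanced.extend(rng.sample(group, target))
    rng.shuffle(balanced)
    return balanced


# Hypothetical records; column names and values are illustrative only.
flights = [
    {"carrier": " AA ", "dep_delay": 35.0, "cancelled": 0.0},
    {"carrier": "dl", "dep_delay": 0.0, "cancelled": 0.0},
    {"carrier": "Ua", "dep_delay": -4.0, "cancelled": 0.0},
    {"carrier": "AA", "dep_delay": None, "cancelled": 1.0},
    {"carrier": "DL ", "dep_delay": 12.0, "cancelled": 0.0},
    {"carrier": "ua", "dep_delay": 0.0, "cancelled": 0.0},
]

cleaned = [
    {
        "carrier": normalize_text(f["carrier"]),
        "delayed": recode_binary(f["dep_delay"]),
        "cancelled": recode_binary(f["cancelled"]),
    }
    for f in flights
]

balanced = undersample(cleaned, "delayed")
print(len(cleaned), "rows before balancing,", len(balanced), "after")
```

Undersampling discards majority-class rows rather than duplicating minority-class rows, so the balanced set is smaller than the original but contains no repeated records.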

Results

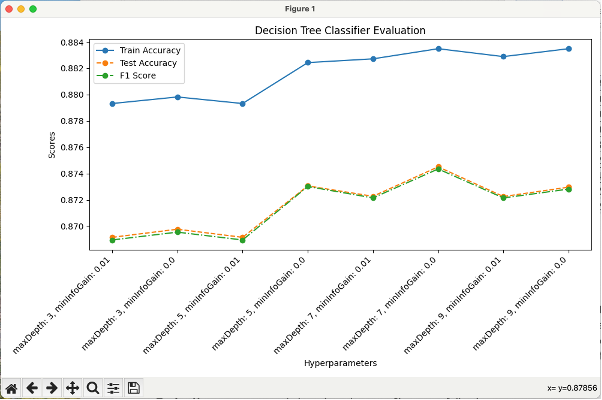

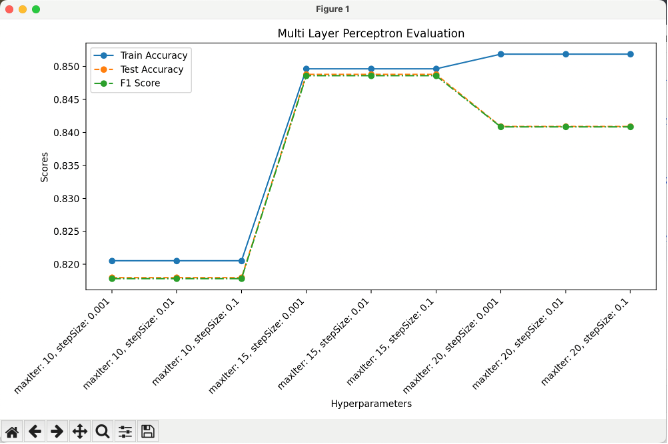

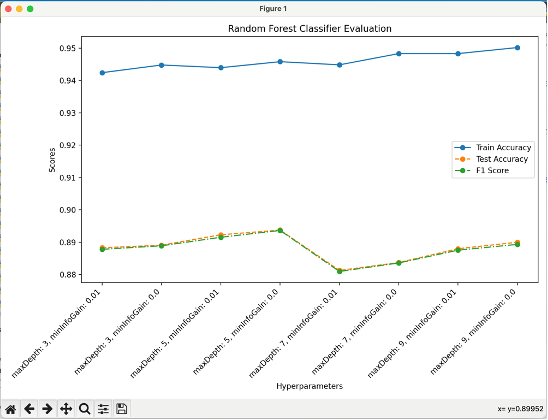

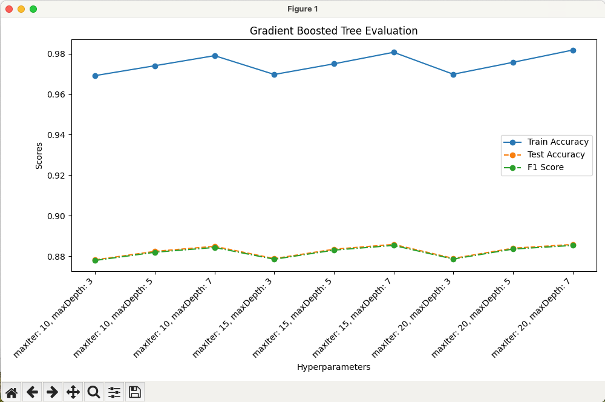

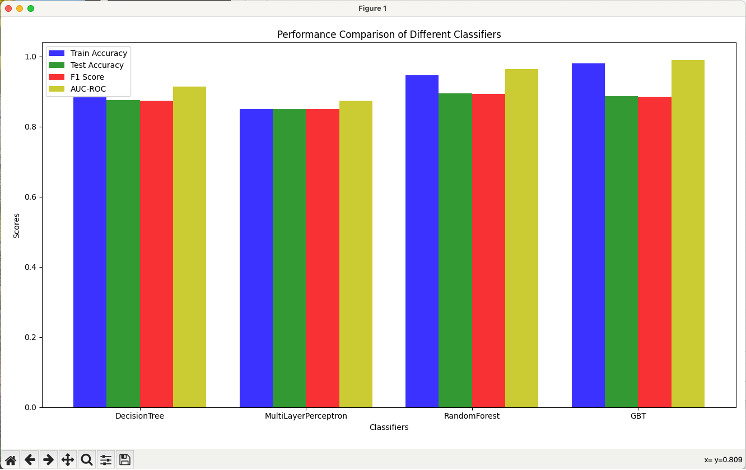

This section presents the training and evaluation results for DecisionTreeClassifier, MultilayerPerceptronClassifier, RandomForestClassifier, and GBTClassifier, including hyperparameter tuning and its effect on accuracy, F1 score, and ROC-AUC.
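For readers unfamiliar with the three metrics, the following plain-Python sketch shows how each can be computed for a binary classifier. It is an illustration only: the labels and scores are made up, the function names are hypothetical, and the F1 here is for the positive class alone, which may differ from an averaged F1 reported by a library evaluator.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, fp, tn, fn) for binary labels where 1 is the positive class."""
    tp = fp = tn = fn = 0
    for t, p in zip(y_true, y_pred):
        if p == 1 and t == 1:
            tp += 1
        elif p == 1 and t == 0:
            fp += 1
        elif p == 0 and t == 0:
            tn += 1
        else:
            fn += 1
    return tp, fp, tn, fn


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    return (tp + tn) / (tp + fp + tn + fn)


def f1_score(y_true, y_pred):
    """Harmonic mean of precision and recall for the positive class."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def roc_auc(y_true, scores):
    """Probability that a random positive outscores a random negative (ties count half)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("ROC-AUC needs at least one positive and one negative example")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Made-up labels and predicted probabilities of the positive class.
y_true = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.6, 0.3, 0.1]
y_pred = [1 if s >= 0.5 else 0 for s in scores]

print("accuracy:", round(accuracy(y_true, y_pred), 4))
print("f1:", round(f1_score(y_true, y_pred), 4))
print("roc_auc:", round(roc_auc(y_true, scores), 4))
```

Accuracy and F1 depend on the chosen decision threshold (0.5 above), while ROC-AUC is computed from the raw scores and is threshold-independent.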

Decision Tree Classifier Evaluation

Multilayer Perceptron Evaluation

Random Forest Classifier Evaluation

Gradient Boosted Tree Evaluation

Performance Comparison of Different Classifiers

Conclusion

The study concludes that ensemble methods, particularly bagging and boosting, outperform the neural network model in predicting flight delays, with significant improvements in accuracy and stability. It highlights the importance of thorough data preparation and model optimization.